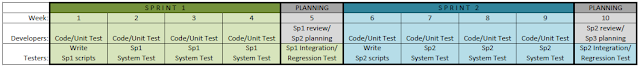

The key idea in this situation is to keep work flowing at a steady pace and to get stories into QA testing early in the process.

(Note: I'm adapting some of these principles from research on the construction of the Empire State Building done by Mary and Tom Poppendieck for their new book, Leading Lean Software Development: Results Are Not the Point.)

1. Get ONE to Done:

Focus the team on one user story at a time and move it to QA testing as soon as possible. So if you have four developers on your team, instead of having them work on four separate stories and moving all four towards QA testing at a roughly equal pace (which could get you four stories ready for QA testing at the same time and create a logjam), have them pair up, split up tasks and get two stories, or even better just one, moving to QA. Think of an automotive assembly line, but instead of each developer building their own car, the team works on one car at a time, together, and completes it before moving on to the next car.
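To make the logjam concrete, here is a minimal sketch of when stories reach QA under the three options above: four stories in flight, two (pairs), or one (the whole team). The function name `qa_arrival_days`, the story sizes, and the assumption that a story's work divides perfectly among the developers on it are all mine, purely for illustration. Real stories rarely split that cleanly.

```python
def qa_arrival_days(story_sizes, developers, streams):
    """Return the day each story is handed to QA.

    story_sizes: development effort per story, in person-days (hypothetical).
    developers:  number of developers on the team.
    streams:     how many stories are in progress at once; developers are
                 split evenly across streams. Assumes work divides perfectly.
    """
    if developers % streams != 0:
        raise ValueError("developers must divide evenly across streams")
    devs_per_stream = developers // streams
    clocks = [0.0] * streams          # elapsed days per stream
    arrivals = []
    for i, size in enumerate(story_sizes):
        stream = i % streams          # hand stories out round-robin
        clocks[stream] += size / devs_per_stream
        arrivals.append(clocks[stream])
    return arrivals


sizes = [4, 4, 4, 4]  # four stories of 4 person-days each (made-up numbers)
four_at_once = qa_arrival_days(sizes, developers=4, streams=4)
in_pairs = qa_arrival_days(sizes, developers=4, streams=2)
one_at_a_time = qa_arrival_days(sizes, developers=4, streams=1)

print("four at once: ", four_at_once)   # [4.0, 4.0, 4.0, 4.0]
print("in pairs:     ", in_pairs)       # [2.0, 2.0, 4.0, 4.0]
print("one at a time:", one_at_a_time)  # [1.0, 2.0, 3.0, 4.0]
```

In every case development finishes on day 4, but only the one-at-a-time option gives the testers something to work on from day 1 and spreads the handoffs evenly.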

2. Feed the Queue Logically:

Be sure to consult the QA testers regarding the logic of testing order for the stories in the sprint. In Sprint Planning and in the daily Standup, be sure to ask the question “which of these stories make sense to test together, or in a certain order?” It sounds like an obvious point, but if you don’t ask the question, it could be missed. Then take this into consideration in prioritizing the stories in-sprint.

3. Prioritize by Size:

We always want to work first on the stories with the highest priority per the Product Backlog. But within a sprint, IF the relative priorities of two stories are close and there's little danger of being stopped mid-sprint, and all else being equal, focus on the largest story first. There's generally (though not always) a direct relationship between the amount of development work for a story and the amount of testing required. There are usually also more unknowns and more issues that can crop up on a larger story than on a smaller one. By putting these stories into your testers' hands sooner, you give them more time to test and uncover these issues, and therefore give your developers more time to address them during the sprint.

Just to illustrate the point, let's say you have five user stories in a sprint, A through E (by priority), as illustrated in the first graph below. I've applied a rule of thumb that QA requires 25% of the development time. IF all else is equal, and you have the leeway to do so, prioritizing the stories by size (as illustrated in the second graph) means each story the developers hand to the testers needs less testing time than the one before it.


Also, by leaving the smaller stories to the end (the ones requiring less testing as well, per the rule of thumb), you accomplish two things. First, you shorten the lag between when your developers complete their work and the end of the sprint, which keeps the developers from potentially “sitting around” at the end of a sprint. Second, you make it easier for QA testers to finish testing in the limited time left at the end of a sprint, because the final stories should need less testing.
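The arithmetic behind the size-first ordering can be sketched in a few lines. This is only an illustration under stated assumptions: the story sizes are made-up numbers (not the ones in the graphs), the team develops one story at a time, a single QA queue tests stories in the order they arrive, and QA time is 25% of development time per the rule of thumb. The names `Story` and `simulate` are hypothetical.

```python
from dataclasses import dataclass

QA_RATIO = 0.25  # rule of thumb from the text: QA takes 25% of dev time


@dataclass(frozen=True)
class Story:
    name: str
    dev_days: float  # hypothetical development effort in days

    @property
    def qa_days(self) -> float:
        return self.dev_days * QA_RATIO


def simulate(stories):
    """Develop stories one at a time and test them in arrival order.

    Returns (rows, tail): rows holds (name, dev_done, qa_days, qa_done)
    per story; tail is the testing time left after development ends.
    """
    dev_clock = 0.0
    qa_clock = 0.0
    rows = []
    for s in stories:
        dev_clock += s.dev_days
        qa_start = max(dev_clock, qa_clock)  # QA waits for the handoff
        qa_clock = qa_start + s.qa_days
        rows.append((s.name, dev_clock, s.qa_days, qa_clock))
    return rows, qa_clock - dev_clock


# Stories A-E listed in priority order, with made-up sizes.
by_priority = [Story("A", 3), Story("B", 8), Story("C", 2),
               Story("D", 5), Story("E", 4)]
by_size = sorted(by_priority, key=lambda s: s.dev_days, reverse=True)

for label, order in (("priority order", by_priority),
                     ("largest first", by_size)):
    rows, tail = simulate(order)
    print(label)
    for name, dev_done, qa_days, qa_done in rows:
        print(f"  {name}: dev done day {dev_done:g}, "
              f"QA needs {qa_days:g}, QA done day {qa_done:g}")
    print(f"  testing left after development ends: {tail:g} days")
```

With these sizes, development ends on day 22 either way. In priority order the last story handed over still needs 1 day of testing, while largest-first leaves only 0.5 days, and the testing time per handoff falls steadily (2, 1.25, 1, 0.75, 0.5).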

Keeping these simple guidelines in mind should help keep your sprint queue humming along like an assembly line, and make it that much easier to practice Agile with manual testing.

As always, I welcome your comments.