In Scrum (my particular flavor of Agile), we promise to deliver releasable product at the end of every Sprint (iteration). The software is considered potentially releasable based on the Team and Product Owner’s definition of “Done.” Definitions of “Done” vary greatly from team to team and project to project. At a high level, however, “Done” typically includes similar basic elements.

Here is a typical basic definition of “Done”:

1. Code completed

2. Peer code review completed

3. Unit tests completed

4. System tests completed

5. Requirements documentation updated

6. Integration tested

7. Regression tested

Agile devotees like me will tell you that automated testing at all stages is a true key to high throughput, or velocity, on a team. When a team is developing in a four-week Sprint using unit tests written into the code, scriptable QA testing tools that automate regression testing, and a Continuous Integration server that runs a build (and thus runs the unit tests) on each check-in, the amount of releasable product that can be built in a Sprint can be really impressive. Some people advise that some or most of these elements are required in an Agile adoption. However, when your team has few or even none of these automated test harness components, and procurement details put acquiring the necessary software and hardware beyond the foreseeable horizon, why let that stop you from adopting Agile?

The key time crunch in the cycle, as is always the case with testing, comes at the end. You want developers to be fully engaged and working, but if they were to develop through the entire four-week Sprint, there would be no time left for QA to do manual Integration and Regression testing, which has to wait until all the code is completed. If you want to give the testers a full week, let’s say, to complete these tests, you might have your developers stop developing three weeks into the Sprint to give QA that fourth week. But having idle developers will rightfully cause issues with management in short order.

In Scrum, you have additional set meetings every Sprint. The Sprint Review (final demo of product developed during the Sprint) can run several hours. The same is true for the Sprint Retrospective (a process retrospective around what went well, what didn’t, and what processes we want to change for the next Sprint). Sprint Planning for the next Sprint (choosing items from the Product Backlog and breaking them into development tasks) can take up an entire day. Throw in a Product Backlog Grooming meeting (fleshing out requirements further down the Product Backlog, slated for a future Sprint, and putting preliminary estimates on them), and you have most of a week taken up in necessary meetings for the development team. Try to run a Sprint every four weeks, with the last day of one Sprint butted right up against the first day of the next, and you’ll find yourself either cutting significantly into development time to hold these meetings or shorting the meetings themselves. Either way, you’re headed for disaster.

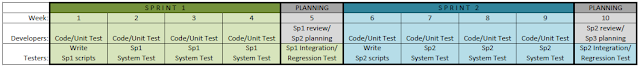

One way I overcome these constraints is to run a five-week QA Sprint overlapping a four-week development Sprint plus a planning week. That planning week between development Sprints is the time for QA to complete Integration and Regression testing while the development team participates in the planning meetings and conducts analysis to flesh out the Product Backlog. The developers are available to address issues encountered in QA’s testing if need be, without taking away from their development for the next Sprint. A typical schedule with duties might look like this:

Week 1 (Sprint 1): Developers begin coding and unit testing; QA begins developing system test scripts

Week 2 (Sprint 1): Developers release code to system testing and continue coding and unit testing; QA runs system tests and refines scripts

Week 3 (Sprint 1): Developers release code to system testing and continue coding and unit testing; QA runs system tests and refines scripts

Week 4 (Sprint 1): Developers release code to system testing and continue coding and unit testing; QA runs system tests and completes Integration/Regression scripts

Week 5: PLANNING WEEK. Sprint Review/Retrospective meetings, Product Backlog Grooming meeting, Sprint 2 Planning; QA runs Regression/Integration testing

Week 6 (Sprint 2): Developers begin coding and unit testing; QA begins developing system test scripts

Week 7 (Sprint 2): Developers release code to system testing and continue coding and unit testing; QA runs system tests and refines scripts

Week 8 (Sprint 2): Developers release code to system testing and continue coding and unit testing; QA runs system tests and refines scripts

Week 9 (Sprint 2): Developers release code to system testing and continue coding and unit testing; QA runs system tests and completes Integration/Regression scripts

Week 10: PLANNING WEEK. Sprint Review/Retrospective meetings, Product Backlog Grooming meeting, Sprint 3 Planning; QA runs Regression/Integration testing
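
If you want to project this cadence onto a longer calendar, the repeating five-week cycle above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the names `Week`, `describe_week`, and `build_schedule` are hypothetical, and the activity strings simply paraphrase the schedule above.

```python
from __future__ import annotations

from dataclasses import dataclass

DEV_WEEKS = 4       # length of the development Sprint in the schedule above
PLANNING_WEEKS = 1  # planning week between development Sprints
CYCLE_WEEKS = DEV_WEEKS + PLANNING_WEEKS  # the five-week QA Sprint


@dataclass(frozen=True)
class Week:
    number: int        # 1-based calendar week
    sprint: int        # Sprint whose work is built or tested this week
    is_planning: bool  # True for the planning week that closes each cycle
    dev_activity: str
    qa_activity: str


def describe_week(number: int) -> Week:
    """Map a 1-based calendar week onto its place in the five-week cycle."""
    sprint = (number - 1) // CYCLE_WEEKS + 1
    position = (number - 1) % CYCLE_WEEKS + 1  # 1..5 within the cycle

    if position == 1:
        dev = "begin coding and unit testing"
        qa = "begin developing system test scripts"
    elif position < DEV_WEEKS:
        dev = "release code to system testing; keep coding and unit testing"
        qa = "system testing; refine scripts"
    elif position == DEV_WEEKS:
        dev = "release code to system testing; keep coding and unit testing"
        qa = "system testing; complete Integration/Regression scripts"
    else:
        # Planning week: developers are in meetings, QA finishes testing.
        dev = (
            "Sprint Review/Retrospective, Product Backlog Grooming, "
            f"Sprint {sprint + 1} Planning"
        )
        qa = "Regression/Integration testing"

    return Week(number, sprint, position > DEV_WEEKS, dev, qa)


def build_schedule(total_weeks: int) -> list[Week]:
    """Return one Week entry per calendar week, starting at week 1."""
    return [describe_week(n) for n in range(1, total_weeks + 1)]


if __name__ == "__main__":
    for week in build_schedule(10):
        label = "PLANNING WEEK" if week.is_planning else f"Sprint {week.sprint}"
        print(f"Week {week.number} ({label}): "
              f"Developers: {week.dev_activity} | QA: {week.qa_activity}")
```

Changing `DEV_WEEKS` or `PLANNING_WEEKS` lets you try a different cadence, though the four-plus-one split is the only one I have described here.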

Combine this strategy with a “Release Sprint,” where you’ll conduct activities that belong to a production release rather than a development Sprint, such as User Acceptance Testing, a full end-to-end regression test, and any other release reviews. Overlay the release iteration on top of another development Sprint, if appropriate, to keep your team developing new features. Plan a lighter workload for that Sprint so that you have bandwidth available to address issues found in, say, UAT, as a top priority. If release testing goes well and the team finds itself with available bandwidth towards the end of that Sprint, pull in additional items off the Product Backlog to fill the Sprint.

As I say, this is one way to implement Agile in an all-manual testing universe. I’d be interested to hear your experiences with similar challenges. As always, I welcome your comments.

Hi David,

In the latest installment of our webcast, This Week in Testing, we talked about your post. I invite you to check it out, comment, and, if you really like what you see and want to see more, spread the word.

Gil Zilberfeld, Typemock

Gil-

Thanks for the mention! It is an interesting webcast, and I will spread the word.

Yes, the method I describe does work. It's not enacting waterfall, though. It's a way to implement Agile if you have the constraint of manual testing, i.e. no available test automation. And it's important to note that I'm not talking about testing one iteration behind development, I'm talking about testing at the same time with just an overlap into my planning week to accommodate the realities of manual testing and still be able to implement Agile. I'll update this post with a graphic to illustrate this better.

David,

I was referred to this post by Argy, and it's a good read. I do have a question for you: how do you propose to implement automation scripts in the iteration when manual system testing hasn't been completed? You are familiar with the types of automation we do, but I'm not sure I agree that automation scripts should be started before a particular component has been manually system tested.

Also, what is your definition of Unit tests, and what do you expect from a developer when they say, "I have developed Unit tests and the code/feature is ready to be turned over to QA"?
